Quantum computing has gotten a ton of press, due to its enormous theoretical promise--essentially, that you can parallelize your computations across parallel universes. Unfortunately, it's possible that, like fusion power, which is theoretically superior to all other forms of power generation but currently seems intractable in practice, quantum computing is "the technology of the future, and always will be". It's also possible the laws of physics constrain quantum computers to an impractically small size--we don't know enough about the coherence collapse process to say for sure.

| | Ordinary Computer | Quantum Computer |
| --- | --- | --- |
| Goal | Get 'er done! | Speedups up to exponential: e.g., search n values in sqrt(n) time, factor integers in polynomial time. |
| Data storage | 1's and 0's (bits) | Vector with axes 1 and 0 (qubits). Not just 1 or 0: both at once (Hadamard gate) |
| Assignments? | Yes | No (quantum "cloning" violates laws of physics) |
| Reversible? | No (e.g., assignments) | Yes, except for "measurement" operations, which cause wavefunction collapse. |
| Swap? | Yes | Yes |
| Logic gates | AND, OR, NOT | CNOT, Hadamard (rotate 45 degrees) |
| Programming Model | Ordinary instructions | Reversible Quantum Gate Operations, and Irreversible Collapse |
| Clock Limit | Switching speed | Coherence time |
| When? | Now | ??? |
| Hardware Limits | Heat/power | How many bits can you keep coherent? |

In some fields, there's a simple and easy-to-visualize core operation, concealed beneath layers of confusing and complicated notation. I'm actually not sure if quantum mechanics is like that, or if it's confusing and complicated all the way down!

You can get a decent feeling for the process of quantum gate design using a simulator like jQuantum, by Andreas de Vries (download the .jar, since applets are now forbidden unless you're on HTTPS and have a certificate). It makes very little sense unless you are working through a good set of example quantum circuits.

Many things, like photons or electrons, display several very odd mechanical properties with mystical sounding quantum names. The key point here is that everything is made of waves.

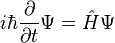

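The wave equation for a particle is the Schrödinger equation:

$$i\hbar \frac{\partial}{\partial t}\Psi = \hat{H}\Psi$$

Here $\Psi$ is the particle's wavefunction, $\hbar$ is the reduced Planck constant, and $\hat{H}$ is the Hamiltonian, the operator for the particle's total energy.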

Restating this equation in small words, "a particle's wave changes with time depending on the particle's energy."

I prepared some photographic examples of wave properties visible in laser light. Waves are very strange; see IBM's atomic force microscope images of electrons bouncing off atoms, and compare them to the Falstad ripple tank applet (again, download the .jar, because applets are dead) for an interactive version.

A quantum computer is based on "qubits", which you can think of as a dot in 2D space: the X axis means a "0", the Y axis means a "1". Normal bits must lie on one axis or another, but qubits can live between the axes. For example,

Since the coordinates (0,0) mean "not a 0, and not a 1", and we don't know what that means, we usually require the qubit to live on a unit sphere--it's either going to be a zero or going to be a one, so the probabilities (each equal to the square of an amplitude) must add up to one.
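As a rough illustration of that unit-length rule, here is a minimal Python sketch that treats a qubit as a two-entry list of amplitudes. The helper names `normalize` and `probabilities` are made up for this example, and it uses real amplitudes only for simplicity (real qubits have complex amplitudes).

```python
import math

def normalize(amplitudes):
    """Scale a [zero_amplitude, one_amplitude] pair to unit length."""
    length = math.sqrt(sum(abs(a) ** 2 for a in amplitudes))
    if length == 0:
        raise ValueError("(0,0) is not a valid qubit state")
    return [a / length for a in amplitudes]

def probabilities(qubit):
    """Chance of reading a 0 and a 1: the squared amplitudes."""
    return [abs(a) ** 2 for a in qubit]

zero = [1.0, 0.0]                 # on the X axis: definitely "0"
one = [0.0, 1.0]                  # on the Y axis: definitely "1"
halfway = normalize([1.0, 1.0])   # between the axes: equal parts 0 and 1

print(halfway)                    # both amplitudes are about 0.707
print(probabilities(halfway))     # both probabilities are about 0.5
```

The amplitudes of `halfway` are each about 0.707, not 0.5, because it is the squares that have to sum to one.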

There are many ways to implement qubits, such as via photon polarization, where "0" means horizontally polarized, "1" means vertically polarized, and a superposition is polarized at an angle in between (e.g., diagonally). You could also implement the qubits as possible positions of an electron, where "0" means in the left box, "1" in the right box, and superposition means it's sitting in both boxes at once. D-Wave represents qubits as magnetic fields generated by a current loop in a superconductor; "0" means pointing north, "1" means pointing south, and superposition means both at once.

Tons of research groups have built single-bit quantum computers, but the real trick is entangling lots of bits together without premature "collapse", which seems to be a lot harder to actually pull off, typically requiring the system to be electrically isolated, mechanically isolated from vibrations, and kept near absolute zero (e.g., D-Wave runs at 20 milliKelvin = 0.02 K). Collapse is the single biggest limiting factor in building useful quantum computers.

Just like a classical computer uses logic gates to manipulate bits, the basic instructions in a quantum computer will use quantum logic gates to manipulate individual wavefunctions. Because you don't want to destroy any aspect of the data (this causes decoherence), you can represent any quantum logic gate with a rotation matrix (a unitary matrix).
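To make "doesn't destroy any aspect of the data" concrete, here is a small Python sketch that applies a gate to a state, checks that the total probability is unchanged, and then undoes the gate. It assumes real-valued amplitudes, so the inverse of the unitary matrix is just its transpose. The Hadamard gate is used as the example, and `apply` and `transpose` are helper names invented here.

```python
import math

def apply(gate, state):
    """Matrix-vector product: run a state through a gate."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]

def transpose(gate):
    """For a real-valued unitary matrix, the transpose is the inverse."""
    return [list(column) for column in zip(*gate)]

h = 1 / math.sqrt(2)
HADAMARD = [[h, h],
            [h, -h]]

state = [0.6, 0.8]                             # 0.36 + 0.64 = 1
after = apply(HADAMARD, state)                 # forward through the gate
restored = apply(transpose(HADAMARD), after)   # and back again

total_before = sum(a * a for a in state)
total_after = sum(a * a for a in after)
print(total_before, total_after)   # both are 1, up to rounding
print(restored)                    # the original state, up to rounding
```

Nothing is lost on the way through: the probabilities still sum to one, and the input can be recovered exactly, which is what "reversible" means for these gates.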

I think of a qubit like a little vector. One qubit is a 2-float vector representing the amplitudes for zero and one:

| a=0 |
| a=1 |

A "Pauli-X" gate is represented by this 2x2 rotation matrix:

| 0 | 1 |
| 1 | 0 |

Labeling the columns with the input amplitudes and the rows with the output amplitudes, we have:

| | input a=0 | input a=1 |
| --- | --- | --- |
| output a=0 | 0 | 1 |
| output a=1 | 1 | 0 |

The amplitude for a=1 on the input becomes the amplitude for a=0 on the output, and vice versa--this is just a NOT gate!
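The same swap can be checked numerically. This is a minimal sketch in plain Python; `apply` is a hypothetical helper that does an ordinary matrix-vector multiply, and the example amplitudes 0.6 and 0.8 are arbitrary values whose squares sum to one.

```python
PAULI_X = [[0, 1],
           [1, 0]]

def apply(gate, state):
    """Matrix-vector product: run a state through a gate."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]

state = [0.6, 0.8]               # amplitude 0.6 for a=0, 0.8 for a=1
flipped = apply(PAULI_X, state)
print(flipped)                   # [0.8, 0.6]: the two amplitudes trade places

# Applying the gate twice gets back the original state, so it is reversible.
print(apply(PAULI_X, flipped))   # [0.6, 0.8]
```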

A controlled NOT takes two bits as input. Two qubits make a 2^2 = 4 float vector with these components:

| a=0 && b=0 |
| a=0 && b=1 |
| a=1 && b=0 |
| a=1 && b=1 |

The CNOT gate's matrix is basically a 2x2 identity, and a 2x2 NOT gate:

| 1 | 0 | 0 | 0 |
| 0 | 1 | 0 | 0 |
| 0 | 0 | 0 | 1 |
| 0 | 0 | 1 | 0 |

Again, putting in the input and output vectors, we can see what's going on:

| | input a=0 && b=0 | input a=0 && b=1 | input a=1 && b=0 | input a=1 && b=1 |
| --- | --- | --- | --- | --- |
| output a=0 && b=0 | 1 | 0 | 0 | 0 |
| output a=0 && b=1 | 0 | 1 | 0 | 0 |
| output a=1 && b=0 | 0 | 0 | 0 | 1 |
| output a=1 && b=1 | 0 | 0 | 1 | 0 |

If a=0, nothing happens--b's probabilities are exactly like before. If a=1, then b gets inverted, just like a NOT gate. If a is in a superposition of states, b is in a superposition of being inverted and not being inverted.
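All three cases can be tried out with the 4x4 matrix. The sketch below is plain Python with a hypothetical `apply` helper, and it orders the four amplitudes as a=0&&b=0, a=0&&b=1, a=1&&b=0, a=1&&b=1. The superposition case assumes a starts as an equal mix of 0 and 1 while b starts at 0.

```python
import math

CNOT = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]

def apply(gate, state):
    """Matrix-vector product: run a state through a gate."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]

# Amplitude order: [a=0&&b=0, a=0&&b=1, a=1&&b=0, a=1&&b=1]
a0_b0 = [1, 0, 0, 0]
a1_b0 = [0, 0, 1, 0]
print(apply(CNOT, a0_b0))   # [1, 0, 0, 0]: a=0, so b is left alone
print(apply(CNOT, a1_b0))   # [0, 0, 0, 1]: a=1, so b flips to 1

# a is an equal superposition of 0 and 1, b is 0.
h = 1 / math.sqrt(2)
a_super_b0 = [h, 0, h, 0]
entangled = apply(CNOT, a_super_b0)
print(entangled)            # amplitude only on a=0&&b=0 and a=1&&b=1
```

In the last case the output has amplitude only on "both 0" and "both 1": b has been inverted in exactly the branch where a=1, so the two qubits are now entangled.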

The basic programming model with a quantum computer is:
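Going by the comparison table earlier (reversible gate operations plus irreversible collapse), one plausible reading of that model is three steps: initialize the qubits to a known state, apply reversible gates, then measure. The following Python sketch simulates those steps for a single qubit. The three-step breakdown, the `measure` helper, and the fixed random seed are assumptions made for illustration, not a description of any real quantum hardware API.

```python
import math
import random

def apply(gate, state):
    """Matrix-vector product: run a state through a gate."""
    return [sum(g * s for g, s in zip(row, state)) for row in gate]

def measure(state, rng):
    """Irreversible step: pick 0 or 1 with probability amplitude**2, then collapse."""
    p_zero = abs(state[0]) ** 2
    outcome = 0 if rng.random() < p_zero else 1
    collapsed = [1.0, 0.0] if outcome == 0 else [0.0, 1.0]
    return outcome, collapsed

h = 1 / math.sqrt(2)
HADAMARD = [[h, h],
            [h, -h]]

rng = random.Random(0)   # fixed seed so the run is repeatable
counts = [0, 0]
for _ in range(1000):
    qubit = [1.0, 0.0]                     # 1. initialize to a known state ("0")
    qubit = apply(HADAMARD, qubit)         # 2. reversible gate: equal superposition
    outcome, qubit = measure(qubit, rng)   # 3. irreversible measurement
    counts[outcome] += 1

print(counts)   # roughly half zeros and half ones
```

Each run yields a single classical bit; the superposition itself is never directly visible, only the statistics over many runs.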

People started to get really interested in quantum computers in 1994, when Peter Shor showed that a quantum computer could factor large numbers in polynomial time. The stupid algorithm for factoring is exponential (just try all the factors!), and though there are smarter subexponential algorithms known, there aren't any non-quantum polynomial time algorithms known (yet). RSA encryption, which your web browser uses to exchange keys in "https", relies on the difficulty of factoring large numbers, so cryptographers are very interested in quantum computers. In 1996, Lov Grover showed an even weirder result, that a quantum search over n entries can be done in sqrt(n) time.
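To get a feel for where the sqrt(n) comes from, here is a small classical simulation of Grover's search in Python. It is only a sketch: it stores all n amplitudes in a list, so the simulation itself does O(n) work per step and gains nothing, and the function name `grover_search` and the choice of marked entry are invented for this example. What it does show is that about (pi/4)*sqrt(n) rounds are enough to concentrate nearly all the probability on the marked entry.

```python
import math

def grover_search(n_entries, marked):
    """Classically simulate Grover's search over a list of amplitudes."""
    amp = [1 / math.sqrt(n_entries)] * n_entries   # equal superposition
    iterations = int(math.pi / 4 * math.sqrt(n_entries))
    for _ in range(iterations):
        amp[marked] = -amp[marked]                 # oracle: flip the marked entry's sign
        mean = sum(amp) / n_entries
        amp = [2 * mean - a for a in amp]          # diffusion: reflect about the mean
    return iterations, amp[marked] ** 2

iterations, p_success = grover_search(1024, marked=123)
print(iterations)   # 25 rounds for 1024 entries
print(p_success)    # probability of reading out the marked entry, above 0.99
```

A classical search over 1024 unsorted entries needs about 512 lookups on average, compared to 25 oracle calls here.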

At the moment, nobody has built a popular, easy-to-use quantum computer, but there are lots of interesting experimental ones. The biggest number a quantum computer has factored is 21 (=3*7, after piles of science; see readable poster description of process). But *if* the hardware can be scaled up, a quantum computer could solve problems that are intractable on classical computers. Or perhaps there is some physical limitation on the scale of wavelike effects--the "wavefunction collapse"--and hence quantum computers will always be limited to a too-small number of bits or too-simple circuitry. The big research area at the moment is "quantum error correction", a weird combination of physics-derived math, experimental work on optics benches, and theoretical computer science. At the moment, nobody knows if this scaling of hardware and algorithms will combine to enable useful computations anytime soon.

There's a different "Adiabatic Quantum Computer" design used by British Columbia-based quantum computer startup D-Wave that they hope will scale to solve some large problems. The adiabatic part of the name refers to the adiabatic theorem, proved in 1928, that says a system in quantum ground state, moved slowly (adiabatically) to a new state, is still in ground state. In practice, this allows the quantum solution of reasonably large problems, up to 512 bits on their current hardware, but only those that fit this model and the company's choice of wiring. This is not a general-purpose quantum computer, but it has shown speedups on real-world optimization problems. They published some results in Nature (described here), and have some fairly big contracts from Amazon, Lockheed Martin, Google, etc. D-Wave raised another $29 million at the end of 2014, so somebody thinks they're on to something useful.

Basically every big tech company has some people working on quantum computing, from Microsoft to Google.

How do we deal with the cryptographic threat from quantum computers?