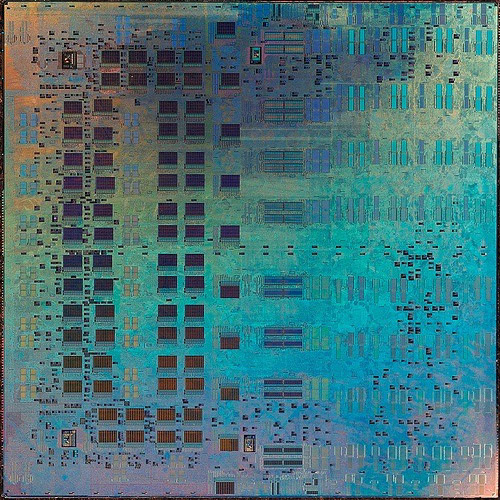

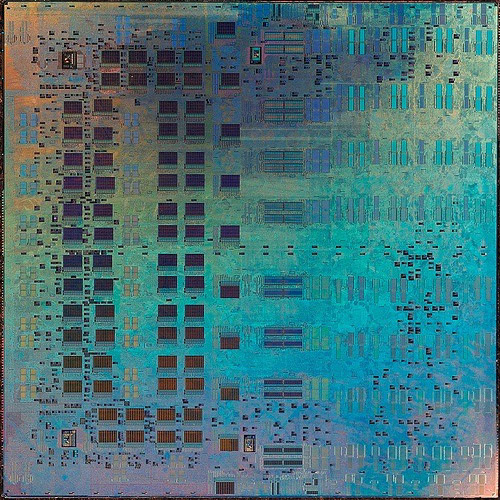

TRIPS die prototype (Toren Smith, 2007, CC-BY-SA-3.0)

TRIPS: Tera-op, Reliable, Intelligently adaptive Processing System

TRIPS is a new experimental processor architecture whose goal is to execute trillions of instructions per second by etching many simple, identical computing cores onto a single silicon die. TRIPS CPUs use Explicit Data Graph Execution (EDGE) to achieve their parallelism. A major design goal of the project is an architecture that scales to hundreds or even thousands of identical cores on a single chip, without requiring programs to be written explicitly to take advantage of those cores. The TRIPS project hopes to reach one TFLOPS on a single processor by 2012.

Superscalar CPU designs exploit instruction-level parallelism by executing, in parallel, those instructions that do not depend on the results of computations still in progress. Because the CPU performs this dependency analysis itself at runtime, superscalar machines are a dynamic placement design. The look-ahead this provides is often small, usually about 3 or 4 instructions, and even that requires very complicated circuitry. Because which instructions will execute together is not known ahead of time, superscalar machines are also a dynamic issue design.
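Dynamic placement and dynamic issue can be sketched with a toy Python model of a superscalar issue stage. It assumes register renaming has already removed false dependencies (each register is written once), fixed per-instruction latencies, and illustrative `width` and `window` parameters. The `Instr` class and `dynamic_issue` function are hypothetical and do not model any real CPU.

```python
from dataclasses import dataclass


@dataclass(eq=False)  # compare by identity so identical-looking instructions stay distinct
class Instr:
    name: str
    dest: str                # register written (assumed unique: renaming removed false deps)
    srcs: tuple[str, ...]    # registers read
    latency: int = 1         # cycles until the result is available


def dynamic_issue(program, width=4, window=4):
    """Toy superscalar issue: each cycle, examine at most `window` of the oldest
    unissued instructions and issue up to `width` whose operands are ready.
    Assumes `program` is in a valid sequential order.
    Returns a list of (cycle, [names issued that cycle])."""
    produced = {ins.dest for ins in program}  # registers computed inside the program
    ready_at = {}                             # register -> cycle its value is available
    pending = list(program)
    schedule = []
    cycle = 0
    while pending:
        issued = []
        for ins in pending[:window]:
            if len(issued) == width:
                break
            # A source is ready if it is a live-in value or its producer has finished.
            if all(s not in produced or ready_at.get(s, float("inf")) <= cycle
                   for s in ins.srcs):
                issued.append(ins)
        # Results become visible only after this cycle, so same-cycle dependents wait.
        for ins in issued:
            ready_at[ins.dest] = cycle + ins.latency
            pending.remove(ins)
        if issued:
            schedule.append((cycle, [ins.name for ins in issued]))
        cycle += 1
    return schedule


program = [
    Instr("a", "r1", ("x",), latency=3),  # slow load
    Instr("b", "r2", ("r1",)),            # depends on the load
    Instr("c", "r3", ("y",)),
    Instr("d", "r4", ("z",)),
    Instr("e", "r5", ("r3", "r4")),
]
print(dynamic_issue(program, window=4))  # [(0, ['a', 'c', 'd']), (1, ['e']), (3, ['b'])]
print(dynamic_issue(program, window=1))  # [(0, ['a']), (3, ['b']), (4, ['c']), (5, ['d']), (6, ['e'])]
```

With a window of four, the independent instructions c, d and e proceed while b waits on the slow load; with a window of one, everything queues behind b. The hardware has to repeat this readiness check for every instruction in the window on every cycle, which is where the circuit complexity comes from.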

The SIMD (single instruction, multiple data) approach to parallelism instead applies one operation to several data elements of the same type at once. This adds still more specialized circuitry to the CPU, because each vector operation needs multiple arithmetic and logic units for every data type that is to be computed in parallel.
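A minimal Python sketch of a packed-integer SIMD add follows, assuming SSE-style 128-bit registers (the register width is an illustrative choice, not tied to TRIPS or any particular instruction set). `lane_count` and `simd_add` are hypothetical helpers; the list comprehension stands in for lanes that the hardware computes in a single operation.

```python
def lane_count(element_bits, register_bits=128):
    """How many elements of a given width fit in one vector register."""
    return register_bits // element_bits


def simd_add(a, b, element_bits, register_bits=128):
    """Model one packed-integer vector add: a single operation applied to every
    lane. Each lane wraps on overflow independently of its neighbours."""
    n = lane_count(element_bits, register_bits)
    if len(a) != n or len(b) != n:
        raise ValueError(f"expected {n} lanes of {element_bits} bits")
    mask = (1 << element_bits) - 1
    # In hardware all n lanes are added in the same operation.
    return [(x + y) & mask for x, y in zip(a, b)]


print(simd_add([1, 2, 3, 4], [10, 20, 30, 40], element_bits=32))  # [11, 22, 33, 44]
print([lane_count(w) for w in (8, 16, 32, 64)])                   # [16, 8, 4, 2]
```

The number of lanes changes with the element width, which is consistent with the point above that each data type computed in parallel needs its own lane arithmetic.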

Intel's Itanium line uses very long instruction words (VLIW) to achieve instruction-level parallelism without out-of-order execution hardware. VLIW is a static placement, static issue design: both the data dependencies between instructions and the scheduling details are worked out ahead of time by the compiler rather than by the CPU. Unfortunately for proponents of static issue, the compiler cannot reliably predict whether a given memory access will hit in cache, and the growth of cache sizes compounds the problem, so using static issue effectively is very difficult and error-prone.
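The static approach can be illustrated with a toy Python model: a compiler packs instructions into fixed-width bundles using the latencies it assumes, and an in-order machine then runs those bundles, stalling whenever a real latency exceeds the assumed one. The `Instr` class, `static_schedule`, `run_in_order`, the bundle width of 3, and the 2-cycle hit and 10-cycle miss latencies are all assumptions for illustration, not Itanium's actual scheduling rules or timings.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    name: str
    dest: str
    srcs: tuple[str, ...]
    latency: int = 1  # latency the *compiler* assumes (for loads: a cache hit)


def static_schedule(program, width=3):
    """Compile time: place each instruction, in program order, into the earliest
    bundle in which its operands are ready (by assumed latency) and a slot is free.
    Empty bundles are explicit no-op cycles."""
    ready_at = {}  # register -> bundle index at which it is assumed available
    bundles = []
    for ins in program:
        slot = max((ready_at.get(s, 0) for s in ins.srcs), default=0)
        while slot < len(bundles) and len(bundles[slot]) >= width:
            slot += 1
        while len(bundles) <= slot:
            bundles.append([])
        bundles[slot].append(ins)
        ready_at[ins.dest] = slot + ins.latency
    return bundles


def run_in_order(bundles, actual_latency=None):
    """Run time: bundles issue strictly in order, one per cycle. If an operand
    arrives later than the compiler assumed (e.g. a load misses in cache), the
    whole machine stalls. Returns the cycle at which the last result is ready."""
    actual_latency = actual_latency or {}
    ready_at = {}
    cycle = 0
    for bundle in bundles:
        waits = [ready_at.get(s, 0) for ins in bundle for s in ins.srcs]
        cycle = max([cycle] + waits)  # stall until every operand has arrived
        for ins in bundle:
            ready_at[ins.dest] = cycle + actual_latency.get(ins.name, ins.latency)
        cycle += 1
    return max([cycle] + list(ready_at.values()))


program = [
    Instr("a", "r1", ("x",), latency=2),  # load, assumed to hit in cache
    Instr("b", "r2", ("r1",)),
    Instr("c", "r3", ("y",)),
    Instr("d", "r4", ("r2", "r3")),
]
bundles = static_schedule(program)
print([[ins.name for ins in b] for b in bundles])  # [['a', 'c'], [], ['b'], ['d']]
print(run_in_order(bundles))                       # 4: every load hits, as assumed
print(run_in_order(bundles, {"a": 10}))            # 12: load "a" missed in cache
```

The schedule is fixed at compile time, so one cache miss on `a` triples the run time in this toy (4 to 12 cycles). The compiler could instead pad the schedule for the miss case, but then every cache hit would waste cycles.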

EDGE combines static placement with dynamic issue, gaining the benefits of detailed compile-time dependency analysis while avoiding the latency-prediction problems that static issue faces. Under EDGE, the compiler breaks code into hyperblocks in which the local dependencies among instructions are explicitly encoded and optimized. Because source code is already organized into hierarchical closures and subroutines with well-defined entry and exit points, many compilers already have the information needed to perform this translation. The hyperblocks are then scheduled dynamically, based on what data is available. This approach to computation is akin to a kind of lazy evaluation.
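In EDGE designs such as TRIPS, an instruction does not write its result to a named register; instead it names the instructions that consume the result, and each instruction fires once its operands have arrived. The toy Python model below sketches that data-driven firing within one block. `Node`, `run_block`, and the `(instruction, slot, value)` delivery format are hypothetical, and the model ignores the real TRIPS instruction encoding, predication, memory ordering, and block commit.

```python
import operator
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Node:
    op: Callable[..., int]           # function of `arity` arguments
    arity: int
    targets: list[tuple[str, int]]   # (consumer instruction, operand slot) pairs


def run_block(block, deliveries):
    """Fire each instruction as soon as all of its operands have arrived.
    `deliveries` are the block's input values, already routed to their
    consumers as (instruction, slot, value). Returns every result plus the
    order in which instructions fired."""
    operands = {name: [None] * node.arity for name, node in block.items()}
    results, fired = {}, []
    queue = deque(deliveries)
    while queue:
        name, slot, value = queue.popleft()
        operands[name][slot] = value
        if all(v is not None for v in operands[name]):
            node = block[name]
            results[name] = node.op(*operands[name])
            fired.append(name)
            # No register file: the result goes straight to the named consumers.
            for target, target_slot in node.targets:
                queue.append((target, target_slot, results[name]))
    return results, fired


# (a + b) * (a - c); the listing order inside the block does not matter.
block = {
    "mul": Node(operator.mul, 2, []),
    "add": Node(operator.add, 2, [("mul", 0)]),
    "sub": Node(operator.sub, 2, [("mul", 1)]),
}
a, b, c = 5, 3, 1
print(run_block(block, [("add", 0, a), ("sub", 0, a), ("add", 1, b), ("sub", 1, c)]))
# ({'add': 8, 'sub': 4, 'mul': 32}, ['add', 'sub', 'mul'])
print(run_block(block, [("sub", 0, a), ("sub", 1, c), ("add", 0, a), ("add", 1, b)]))
# ({'sub': 4, 'add': 8, 'mul': 32}, ['sub', 'add', 'mul'])
```

Both calls compute the same result, but the firing order follows the order in which operands arrive rather than the order in which instructions are listed in the block.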
