The World's Second Computing Architecture:

The Brain

Why Neurons?

Neurons evolved in response to the need for faster intercellular communication in large, mobile multicellular organisms. With chemical diffusion signaling and round cells, the communication time between cells depends greatly on distance, and is simply too slow in large critters. Here's an (entirely hypothetical) example of the type of trouble you can get into if you lack neurons:

Signal Processing in a Neuron[1]

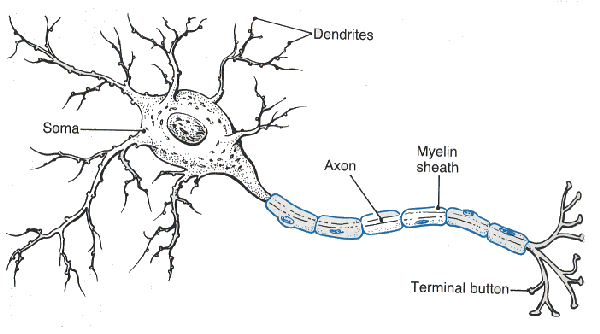

The neuron fixes the communication problem with a cell body attached to long dendrites (inputs) and a very long axon (output). This allows signals to propagate as an electric potential wave along the membrane of a single neuron, rather than jumping from cell to cell via diffusion.

Anatomy of a Neuron:[2]

Signal Activation

A signal (neuron activation spike) originates at the base of the axon in response to activation of the dendrites. The inside of a neuron is held at a constant rest potential (usually around -70 mV) by potassium and sodium ion pumps in the membrane (sodium (Na+) is kept outside, potassium (K+) inside). This potential can be altered by nearby electric fields (if other sections of the membrane are spiking) or by transmitter compounds binding to the ion pumps. When the membrane potential rises above a certain threshold potential, voltage-controlled sodium channels open in the membrane and sodium (positively charged) diffuses into the cell, further raising the voltage. When the sodium gradient has disappeared, the peak voltage has been reached and the sodium channels close while the potassium channels open. Positively charged potassium rushes out of the cell, and the potential returns to the rest potential, at which point the sodium and potassium gradients are re-established for the next pulse. See a nifty animation of the whole process here.

Signal Propagation

Once an activation spike has begun, it travels down the neuron as a wave. However, this wave relies on ions moving across the membrane, so it is still relatively slow (~1 m/s). Neurons with any significant length to their axon have a myelin sheath around sections of the axon. This sheath makes the membrane impermeable and raises its resistance, allowing the action potential to propagate electrically. The myelin is punctuated by sections of open membrane to regenerate the signal. Signals in myelinated axons can travel between 18 and 120 m/s, depending on the width of the axon. There's a cool animation of signal propagation here.
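To put those speeds in perspective, here is a quick back-of-the-envelope calculation. The 1 m axon length is an assumption picked for illustration (roughly a long nerve in a human limb), and the function name is made up for this sketch; the speeds are the ones quoted above.

```python
def propagation_delay_ms(length_m, speed_m_per_s):
    """Time for a spike to travel length_m at a constant speed, in milliseconds."""
    return 1000.0 * length_m / speed_m_per_s

AXON_LENGTH_M = 1.0  # assumed length, for illustration only

speeds = [
    ("unmyelinated (~1 m/s)", 1.0),
    ("myelinated, narrow axon (18 m/s)", 18.0),
    ("myelinated, wide axon (120 m/s)", 120.0),
]

for label, speed in speeds:
    delay = propagation_delay_ms(AXON_LENGTH_M, speed)
    print(f"{label}: {delay:.1f} ms")
```

Under these assumptions the unmyelinated signal takes a full second to arrive, while the fastest myelinated axon delivers it in under 10 ms.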

Signal Transmittance

When an activation spike reaches the end of the axon, it is transmitted to other neurons by chemical signals that cross the synaptic gap. The action potential stimulates the release of vesicles filled with neuro-transmitting chemicals, which then bind to the ion pumps on the neighboring neuron. These transmitters can be excitatory or inhibitory, and the amount of transmitters released can be varied to vary the strength of the signal. The process can be seen in another cool animation.

Varying Signal Strength

Neuron peak activation voltage is always the same, so signal strength has to be encoded in some other manner. As the activation of a neuron increases, the frequency of spike activation pulses increases. How activation affects spike frequency depends on the type of neuron: burst neurons respond with high-frequency bursts followed by a pause, whereas single-spike neurons produce spikes at regular intervals.
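The idea of encoding strength as spike frequency can be illustrated with a leaky integrate-and-fire neuron. This is a standard textbook simplification, not a model taken from these notes. The threshold, time constant, and input units below are assumptions chosen for illustration; only the -70 mV rest potential and the roughly 10 ms minimum firing interval come from the text.

```python
def count_spikes(drive_mv, duration_ms=1000.0, dt_ms=0.1,
                 rest_mv=-70.0, threshold_mv=-55.0,
                 tau_ms=10.0, refractory_ms=10.0):
    """Count spikes of a leaky integrate-and-fire neuron under constant input.

    drive_mv is the steady depolarization (in mV above rest) that the input
    would produce if the neuron never fired (an assumed, simplified unit).
    """
    steps = int(round(duration_ms / dt_ms))
    refractory_steps = int(round(refractory_ms / dt_ms))
    v = rest_mv
    wait = 0      # remaining refractory steps
    spikes = 0
    for _ in range(steps):
        if wait > 0:
            wait -= 1
            continue
        # Leak back toward rest while the input pushes the potential up.
        v += dt_ms * (-(v - rest_mv) + drive_mv) / tau_ms
        if v >= threshold_mv:
            spikes += 1            # every spike is identical ("all or nothing")
            v = rest_mv            # reset after the spike
            wait = refractory_steps
    return spikes

for drive in (10.0, 20.0, 40.0, 80.0):
    print(f"drive {drive:5.1f} mV -> {count_spikes(drive)} spikes per second")
```

In this sketch a weak input never reaches threshold, and stronger inputs produce more spikes per second, with the refractory period capping the rate at about 100 Hz.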

Advantages and Disadvantages of Biological Neural Networks

What this all boils down to is that the brain is an exceedingly complicated structure. With roughly 100 billion non-deterministic neurons (of multiple types!) in the human brain, connected through perhaps 100 trillion synapses,[3] the task of fully modeling brain computation is beyond modern mathematics.[4] This holds true even for much smaller networks!

Advantages

Massively parallel – perfect for pattern matching and dealing with incomplete data

Non-determinism allows organisms to avoid getting stuck in infinite loops (though it can still happen)

Built-in learning mechanisms allow adaptation without explicit knowledge of problem solutions

Disadvantages

Slow response time – Individual neurons can fire at most once every 10 ms or so.

Low throughput for serialized algorithms

Biological Neural Networks – Uses in Computer Science

The two properties of biological neural networks we most often want to use in CS are the massively parallel processing and learning. To use these we can either try to use biological neurons themselves, or simulate them in hardware or software.

Harnessing Biological Neural Networks

Cultured Neural Networks

In recent years many research groups have focused on culturing neurons around electrodes. By reading and applying voltage from and to the electrodes, scientists can allow the neurons to interact with some environment and train them to particular tasks.

One such study, headed by Thomas Demarse at the University of Florida, involved 25,000 rat neurons cultured around 60 electrodes and trained to act as an autopilot in a flight simulator. Two of the electrodes allowed the network to control the pitch and yaw of the plane, while others allowed the network to sense the current trajectory and orientation of the plane. Network responses were trained to particular inputs by applying high- and low-frequency stimulation to the network's output nodes, which previous studies had shown to respectively lower or raise the network activation at that particular site. A pdf version of the paper can be found here.

Rat Controller for Virtual Avatar

In 2001, Demarse's group also hooked up a similar rat neuron contraption to a simulated environment, and allowed it to control an avatar within a virtual room. Feedback was provided to the network about collisions with its virtual environment and its movement through the environment, but no method for explicitly training the network from the feedback was known at the time. Read about it here.

Rat brains aren't the only option for electronically interfacing with biological neural networks! Demarse and Bill Ditto (also of the University of Florida) recently announced results using leech neurons for performing logic operations. Leech neurons are large enough that they can be controlled and linked individually. By taking two neurons and individually controlling the resting potential, Demarse and Ditto were able to create NOR gates, which they then used to compose simple adders, multipliers, and other operations using digital design techniques. The paper is here.
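To see why a NOR gate alone is enough for this, here is a minimal software sketch of the standard digital design construction: every other gate, and then an adder, is built purely from NOR. This is not the circuit from the leech-neuron paper; the function names are chosen for illustration.

```python
def nor(a, b):
    """The only primitive: outputs 1 exactly when both inputs are 0."""
    return int(not (a or b))

# Everything below is composed from nor() alone.
def not_(a):
    return nor(a, a)

def or_(a, b):
    return not_(nor(a, b))

def and_(a, b):
    return nor(not_(a), not_(b))

def xor(a, b):
    # XOR is 1 when the inputs are neither both 1 nor both 0.
    return nor(and_(a, b), nor(a, b))

def half_adder(a, b):
    """Return (sum bit, carry bit) for two input bits."""
    return xor(a, b), and_(a, b)

def full_adder(a, b, carry_in):
    """Return (sum bit, carry out) for two input bits plus a carry."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, or_(c1, c2)

for a in (0, 1):
    for b in (0, 1):
        print(a, "+", b, "->", half_adder(a, b))
```

Chaining full adders bit by bit gives a multi-bit adder, which is the same route conventional digital hardware takes.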

Hurdles for Cultured Neural Networks

Lack of training techniques: Little is known about training cultured networks to specific tasks. A few methods are posited in the above papers, but reinforcement learning is still not nearly as effective as in complete organisms.

Bursting: Trained cultured networks tend to overshoot their response goals, and end up sending network-wide bursts of signals for periods on the order of seconds. This prevents the network from responding to further input while bursting is happening. Bill Ditto has also done some research in this area, using chaos-control techniques to analyze the bursting behavior of networks and stimulation to move networks away from unstable bursting conditions.

Interfaces: Current electrode interfaces are crude – useful for limited input and output, but not for finer control. Recent developments in carbon nanotube manufacturing suggest that nanotube arrays could be used to interface with individual neurons in a network.

Disadvantages of Cultured Neural Networks

Hard to make/maintain/train

Code non-transferable! New cultured networks would have to be grown and trained for each interested party.

Simulating Neural Networks – Artificial Neural Networks

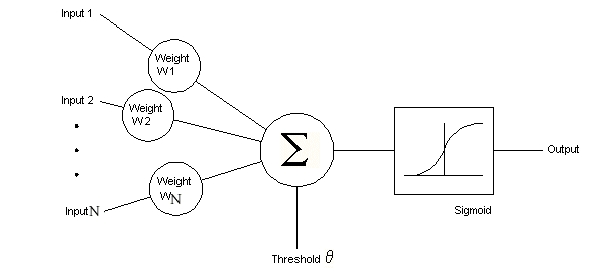

Rather than rely on biological neural systems, we may also simulate networks in software or electronic hardware using a simplified version of the neuron:[5]

A node in an artificial neural network simply computes the weighted sum of its input activations, and runs the result through a sigmoid function (a non-linear function is required here, or we lose the ability to easily match non-linear situations). The threshold of the sigmoid refers to its point of odd symmetry. Networks are set up as a graph of connected nodes. Typically feed-forward networks are used, meaning that signals move through successive layers of nodes from the inputs to the output without any feedback loops:[6]

For a more complete description of how the ANN abstraction works, check out this site.
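The node and the feed-forward arrangement described above can be sketched in a few lines. The layer representation (a list of `(weights, threshold)` pairs per layer) and the example weights are assumptions made for illustration, not values from the figures.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def node_output(inputs, weights, threshold):
    """Weighted sum of the inputs, passed through a sigmoid.

    The sigmoid is shifted so that its point of odd symmetry (output 0.5)
    sits at activation == threshold.
    """
    activation = sum(w * x for w, x in zip(weights, inputs))
    return sigmoid(activation - threshold)

def feed_forward(inputs, layers):
    """Push a signal through successive layers with no feedback loops.

    Each layer is a list of (weights, threshold) pairs, one pair per node.
    """
    signal = list(inputs)
    for layer in layers:
        signal = [node_output(signal, weights, threshold)
                  for weights, threshold in layer]
    return signal

# A hypothetical network: 2 inputs, 2 hidden nodes, 1 output node.
hidden = [([1.0, -1.0], 0.0), ([-1.0, 1.0], 0.0)]
output = [([2.0, 2.0], 1.0)]
print(feed_forward([1.0, 0.0], [hidden, output]))
```

Each layer only reads the outputs of the layer before it, which is what makes the network feed-forward.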

Training ANNs

Despite being simpler than biological networks, artificial neural networks are still extremely non-linear and would be an enormous pain to program directly (and that would defeat the whole purpose!). Fortunately there exist a multitude of algorithms to train ANNs (by adjusting the weights and maybe thresholds).

Supervised Learning is possible when the correct responses are known for a certain set of data, and we wish to train the network to match the data. Supervised Learning is achieved by altering weights in response to the network's output for a given training datum, via some algorithm such as backpropagation. This approach is often used in pattern matching problems, such as image processing and character recognition.
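As a minimal sketch of supervised learning, the code below trains a single sigmoid node by gradient descent on the squared error. This is the one-node special case of backpropagation (often called the delta rule), not a full multi-layer implementation. The training data (a NAND truth table), learning rate, and epoch count are assumptions chosen for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train_node(samples, epochs=5000, rate=0.5):
    """Adjust weights and bias to reduce squared error on known answers."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0  # plays the role of a (negated) threshold
    for _ in range(epochs):
        for inputs, target in samples:
            out = predict(weights, bias, inputs)
            # Error times the slope of the sigmoid at the current output.
            delta = (target - out) * out * (1.0 - out)
            weights = [w + rate * delta * x for w, x in zip(weights, inputs)]
            bias += rate * delta
    return weights, bias

# Known correct responses for every input: the supervised part.
NAND_SAMPLES = [([0, 0], 1), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]

weights, bias = train_node(NAND_SAMPLES)
for inputs, target in NAND_SAMPLES:
    print(inputs, "target", target, "output", round(predict(weights, bias, inputs), 3))
```

Nobody writes the weights by hand here; they are nudged after each training datum in the direction that shrinks the error.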

Reinforcement Learning is useful when we know the results of a network's actions (generally the effect on its environment) and we wish to encourage certain results. A good method for reinforcement learning is to evolve populations of networks, keeping and randomly modifying networks that produce better results. Reinforcement learning is useful for developing networks for environmental interaction or gameplay.
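The evolve-and-keep-the-best approach can be sketched as follows. The environment is a made-up toy: a one-node controller on a line that tries to reach a target position, scored only by how close it ends up. The population size, mutation strength, and every name in the code are assumptions for illustration.

```python
import math
import random

def run_episode(genome, start, target=0.0, steps=20):
    """Let a one-node controller move along a line; reward = -final distance."""
    w, b = genome
    pos = start
    for _ in range(steps):
        # The node sees the signed distance to the target and outputs a move.
        pos += math.tanh(w * (target - pos) + b)
    return -abs(target - pos)

def fitness(genome):
    # Only the result of the actions is scored, never the actions themselves.
    return sum(run_episode(genome, start) for start in (-5.0, 3.0, 8.0))

def evolve(generations=30, pop_size=20, keep=5, sigma=0.5, seed=0):
    rng = random.Random(seed)
    population = [(rng.uniform(-1, 1), rng.uniform(-1, 1))
                  for _ in range(pop_size)]
    best_history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_history.append(fitness(population[0]))
        survivors = population[:keep]           # keep the better networks
        children = []
        while len(survivors) + len(children) < pop_size:
            w, b = rng.choice(survivors)        # randomly modify a survivor
            children.append((w + rng.gauss(0.0, sigma),
                             b + rng.gauss(0.0, sigma)))
        population = survivors + children
    population.sort(key=fitness, reverse=True)
    best_history.append(fitness(population[0]))
    return population[0], best_history

best, history = evolve()
print("best genome:", best)
print("best fitness, first vs last generation:", history[0], history[-1])
```

Because the survivors are carried over unchanged, the best score in the population can never get worse from one generation to the next.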

For more information on network training techniques, check out Wikipedia.

How Much Can ANNs Compute?

It turns out that ANNs are actually Turing complete! Here's a simple NAND gate using a single node ANN:

If A and B are both 1, then the activation on the node is -8, so a 0 is output. Otherwise, the activation is either -4 or 0, both of which are above the threshold, and so the output is 1. Since NAND gates can be used to construct Turing complete machines, so too can ANNs! Note that this does not extend to biological neural networks, since the biological networks are non-deterministic.
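The single-node NAND gate can be checked in code. The weights of -4 per input follow from the activations quoted above (-8, -4, and 0). The threshold of -6 is an assumption, since the text only requires it to lie between -8 and -4, and a hard step stands in for a very steep sigmoid.

```python
def step(activation, threshold):
    """Hard-threshold stand-in for a very steep sigmoid."""
    return 1 if activation > threshold else 0

def nand_node(a, b, weight=-4.0, threshold=-6.0):
    """Single-node NAND; threshold -6 is assumed (anything in (-8, -4) works)."""
    activation = weight * a + weight * b
    return step(activation, threshold)

for a in (0, 1):
    for b in (0, 1):
        print(f"A={a} B={b} activation={-4 * a - 4 * b} output={nand_node(a, b)}")
```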

Implementing ANNs in Hardware

Since the late 80s scads of companies have attempted to implement artificial neural networks in hardware (and many have succeeded). These implementations are rarely of the generic ANN abstraction described above, but rather usually some variation designed with a specific application (or type of training) in mind.

Among the different variations implemented are:

probabilistic models (pram 256)

spiking neuron models

radial basis function activation (uses a peak function instead of a sigmoid function in nodes)

Typically, implementations are severely limited in network size, since hardware requirements grow at least with the square and by as much as the cube of the network dimensions.

Since precision is often not an issue in intermediate steps of ANN computations, analog circuitry has also been used for some implementations. Analog circuitry allows space and energy costs to be reduced and speed to be increased. Unfortunately, manufacturing variations mean that weights discovered on one chip may not work on a different chip. Just as in biological networks, the ANN has to be retrained for each chip on which it runs. Generally these chips are used in applications such as pattern matching in particle colliders, so multiple copies of the network are not required.

Examples of Hardware ANNs

ZISC (IBM's Zero Instruction Set Computer)

LCNN (Analog Local Cluster Neural Network Hardware)

Implemented in analog circuitry

Designed using clustering and radial basis functions, meaning input runs through several distinct networks and the results are compiled into a single output

6 input neurons, 1 output, 8 clusters

The Future of Hardware ANNs

Hardware implementations of artificial neural networks have proved useful in limited fields, but because of the ease of use of software implementations and the specialized nature of hardware implementations, we have yet to see widespread use. As we begin to see more embedded systems with complex processing functions such as speech recognition, face recognition, or complex control, it is possible hardware ANNs will enjoy wider use.

Footnotes

[1] Unless otherwise referenced, material in this section is from The Central Nervous System: Structure and Function (Third Edition) by Per Brodal (2004).

[2] Shamelessly yoinked from http://www.mindcreators.com/NeuronBasics.htm

[3] http://www.nervenet.org/papers/NUMBER_REV_1988.html

[4] Though understanding the neural pathways is beyond modern science, we can still predict something about the behavior of neural networks. This is the job of psychologists and behavioral biologists!

[5] Picture borrowed from http://homepages.gold.ac.uk/nikolaev/311perc.htm

[6] Picture from http://www.dtreg.com/mlfn.htm